Kubernetes

Kubernetes is a widely used open-source platform for container orchestration. It is designed to automate the deployment, scaling, and management of containerized applications. Kubernetes can manage and run applications in various environments, including public, private, and hybrid clouds, and it provides a rich set of features that help control the complexity of running containerized applications at scale. In this blog, we will explain the Kubernetes architecture and its components.

Architecture of Kubernetes

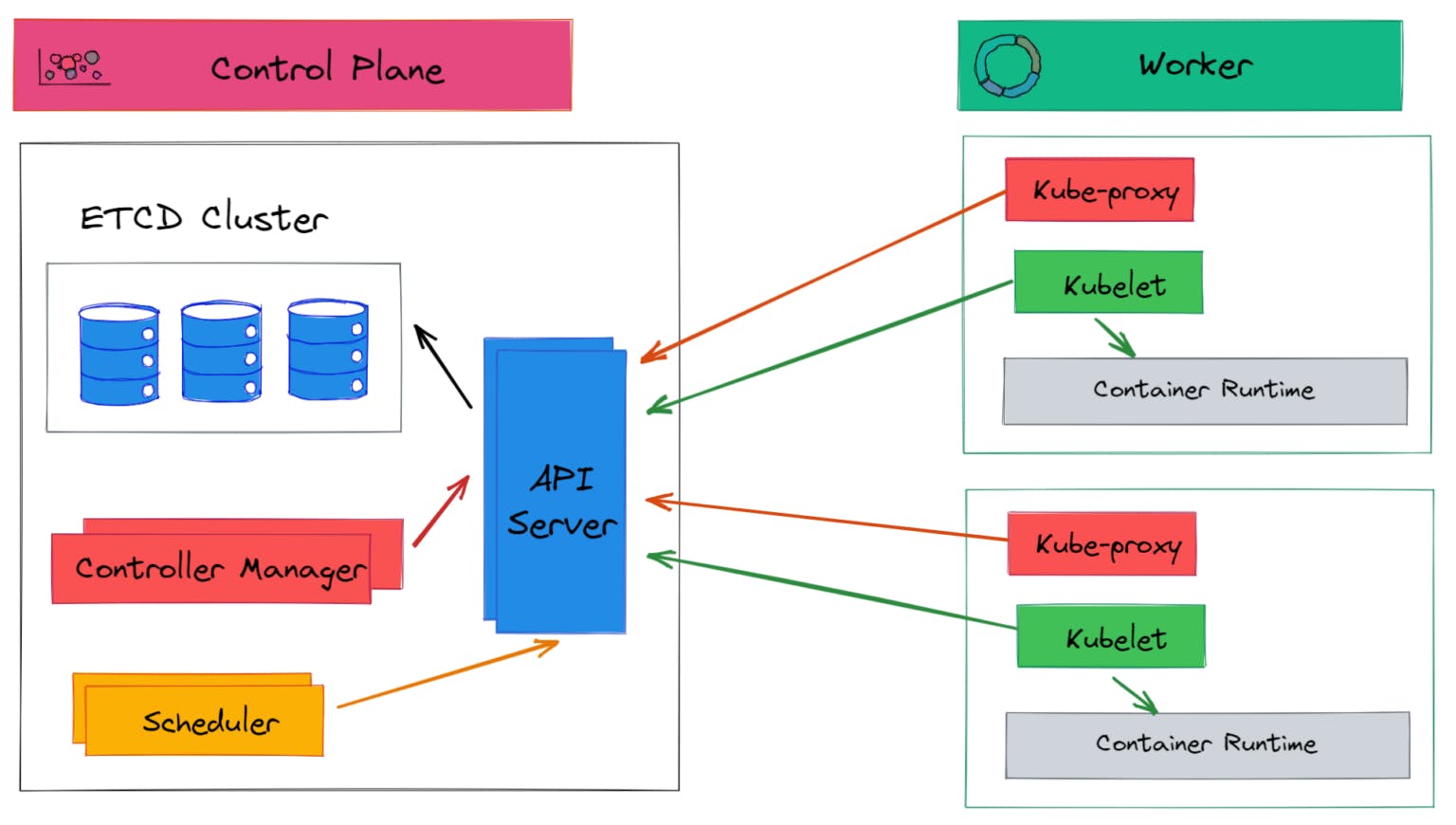

The Kubernetes architecture consists of two main groups of components:

Control Plane Components

Worker Node Components

Control Plane Components:

The control plane components (historically called master components) manage the overall state of the Kubernetes cluster.

The control plane components include:

💡 Kubernetes API Server:

Kubernetes API Server is the brain behind all the operations in a Kubernetes cluster.

It is the primary management point for the Kubernetes cluster and is responsible for validating and processing API requests.

It receives RESTful calls from users, administrators, developers, operators, and external agents, and it persists the resulting cluster state in etcd.
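To make the "validating and processing" part a bit more concrete, here is a minimal sketch of a request passing through authentication, authorization, and validation. It is a toy model, not the real API server: `handle_request`, the `tokens` and `permissions` dictionaries, and the request shape are all hypothetical, and admission control is left out for brevity.

```python
def handle_request(request, tokens, permissions):
    """Run a request through toy authentication, authorization, and validation stages."""
    # Authentication: who is calling? (hypothetical token lookup)
    user = tokens.get(request.get("token"))
    if user is None:
        return 401, "unauthenticated"

    # Authorization: may this user perform this verb on this resource?
    if (request["verb"], request["resource"]) not in permissions.get(user, set()):
        return 403, "forbidden"

    # Validation: is the submitted object well-formed?
    if request["verb"] == "create":
        name = request.get("body", {}).get("metadata", {}).get("name")
        if not name:
            return 422, "invalid: metadata.name is required"
        return 201, "created"

    return 200, "ok"


tokens = {"abc": "alice"}
permissions = {"alice": {("create", "pods")}}

print(handle_request(
    {"token": "abc", "verb": "create", "resource": "pods",
     "body": {"metadata": {"name": "web"}}},
    tokens, permissions,
))
```

Each stage can reject the request on its own, so an invalid object is never stored.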

💡 etcd:

etcd is a distributed key-value store that stores the configuration data of the Kubernetes cluster.

It is used to store the state of the cluster, including the state of all objects (pods, services, deployments, etc.).
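As a rough mental model, the sketch below imitates how cluster objects could be laid out as keys in a key-value store. It is plain Python with a dictionary standing in for etcd. The `ToyStore` class and its methods are hypothetical, and the `/registry/...` key layout is a simplified take on the prefix Kubernetes uses by default.

```python
import json


class ToyStore:
    """In-memory stand-in for etcd: a flat map from string keys to JSON values."""

    def __init__(self):
        self._data = {}
        self.revision = 0  # bumped on every write, loosely like an etcd revision

    def put(self, key, obj):
        self.revision += 1
        self._data[key] = json.dumps(obj)
        return self.revision

    def get(self, key):
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)

    def list_prefix(self, prefix):
        """Return all keys under a prefix, sorted, similar to a range read."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyStore()
store.put("/registry/pods/default/web-1",
          {"spec": {"image": "nginx"}, "status": {"phase": "Pending"}})
store.put("/registry/pods/default/web-2",
          {"spec": {"image": "nginx"}, "status": {"phase": "Running"}})
store.put("/registry/services/default/web", {"spec": {"port": 80}})

print(store.list_prefix("/registry/pods/default/"))
print(store.get("/registry/pods/default/web-2")["status"]["phase"])
```

Listing by prefix is what makes questions like "all pods in the default namespace" cheap to answer.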

💡 Kube-Controller Manager:

The kube-controller-manager is the control plane component that regulates the state of the Kubernetes cluster by running the built-in controllers, such as the node, Deployment, and ReplicaSet controllers.

Controllers are watch-loop processes that compare the cluster's desired state with its actual state and act to close any gap between the two. Both states are recorded in etcd, and controllers read them through the API server.
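The compare-and-correct idea fits in a few lines of code. Below is a minimal sketch of a reconcile loop for a replica count. The function names (`unused_names`, `reconcile`, `apply_actions`) and the pod naming scheme are made up for illustration; a real controller reacts to watch events and makes its changes through the API server.

```python
def unused_names(existing, count):
    """Generate `count` pod names of the form pod-N that are not already taken."""
    names, i = [], 0
    while len(names) < count:
        candidate = f"pod-{i}"
        if candidate not in existing:
            names.append(candidate)
        i += 1
    return names


def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move the actual state toward the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for new ones.
        return [("create", name) for name in unused_names(running_pods, diff)]
    if diff < 0:
        # Too many pods: remove the surplus from the end of the list.
        return [("delete", name) for name in running_pods[diff:]]
    return []  # states match, nothing to do


def apply_actions(actions, running_pods):
    """Apply actions to a copy of the pod list (stands in for calls to the API server)."""
    pods = list(running_pods)
    for verb, name in actions:
        if verb == "create":
            pods.append(name)
        else:
            pods.remove(name)
    return pods


pods = ["pod-0"]
for _ in range(3):  # a real controller keeps looping for as long as it runs
    pods = apply_actions(reconcile(desired_replicas=3, running_pods=pods), pods)

print(pods)
```

After the first pass the two states match, so the later passes produce no actions. That is the steady state a controller aims for.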

💡 Scheduler:

The main role of the scheduler is to assign the pods to the nodes.

Suppose you ask the API server, from the command line, to run a pod (or a container). After the request passes authentication, authorization, and admission control, the API server stores the new pod. The scheduler, which watches the API server for pods that have no node assigned, then looks for the best worker node to run it.

The scheduler filters out the nodes that cannot run a pod taken from the scheduling queue, ranks the remaining nodes based on the resources available and required, and then binds the pod to a specific node.
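Here is a small sketch of that filter-then-rank idea. The node and pod dictionaries, their field names, and the scoring rule are assumptions made for this example; the real scheduler uses a configurable set of filter and score plugins.

```python
def schedule(pod, nodes):
    """Pick a node for the pod: filter out nodes that cannot fit it, then score the rest."""
    # Filtering: keep only nodes with enough free CPU (millicores) and memory (MiB).
    feasible = [
        n for n in nodes
        if n["free_cpu"] >= pod["cpu"] and n["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None  # no node fits, so the pod stays pending

    # Scoring (toy rule): prefer the node with the most headroom on its tightest resource.
    def score(n):
        return min(n["free_cpu"] / pod["cpu"], n["free_mem"] / pod["mem"])

    return max(feasible, key=score)["name"]


nodes = [
    {"name": "node-01", "free_cpu": 500, "free_mem": 4096},
    {"name": "node-02", "free_cpu": 2000, "free_mem": 1024},
    {"name": "node-03", "free_cpu": 4000, "free_mem": 2048},
]
pod = {"name": "web", "cpu": 1000, "mem": 512}

print(schedule(pod, nodes))
```

In this example `node-01` is filtered out for lack of CPU, and `node-03` outranks `node-02` because it has more headroom.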

💡 Cloud Controller Manager(CCM):

The CCM runs the controllers that interact with the underlying infrastructure of a cloud provider, for example to check whether a node that has stopped responding has been deleted in the cloud.

It provides a way to integrate with the cloud provider's APIs to manage the cloud resources.

Worker Node Components:

💡 Container Runtime:

Although Kubernetes is regarded as a "container orchestration tool," it cannot run containers directly.

The Container Runtime Engine is responsible for running the containers on the node.

Kubernetes supports several container runtimes, including containerd, CRI-O, and Docker Engine (through the cri-dockerd adapter).

💡 Kubelet:

Kubelet is one of the main components of Kubernetes responsible for managing individual nodes and their containers.

For a pod to run on a worker node, some component on that node must communicate with the control plane. Kubelet is that component.

The kubelet on each node watches the API server for pods assigned to its node. Once it sees one, it interacts with the container runtime of its node through a plugin-based interface, the Container Runtime Interface (CRI).

In case you got confused, here's a summary of how the Kubernetes components work together. Don't miss this!

The user sends a request to the API server to start a Pod.

The API server validates this request and stores the new pod in etcd.

The scheduler on the control plane, which watches the API server for pods that have no node assigned, picks up the new pod.

The scheduler then requests cluster-related information from the API server, since the API server is the only component that can interact with the etcd store.

After receiving this request from the scheduler, the API server reads the data from the etcd store and provides it to the scheduler.

Based on this information, the scheduler assigns the pod to a node and conveys this decision to the API server.

"Hey API server, the pod should run on node-01"

- scheduler

The API server records the assignment, and the kubelet on that node, which watches the API server for pods bound to its node, picks it up.

The kubelet of that node then interacts with the container runtime via the CRI, and the pod starts running on that node. While the pod is running, the controller manager keeps checking whether the desired state of the cluster matches its actual state.
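The steps above can be sketched as a tiny simulation. This is a toy model under loud assumptions: `ApiServer`, `scheduler_step`, and `kubelet_step` are hypothetical names, a dictionary stands in for etcd, and the scheduler simply takes the first node. In a real cluster the scheduler and kubelet learn about new work by watching the API server; here they are just called in turn.

```python
class ApiServer:
    """Toy API server: the only component that touches the store (a dict standing in for etcd)."""

    def __init__(self):
        self._store = {}

    def create_pod(self, name):
        if not name:
            raise ValueError("pod name is required")  # validation step
        self._store[name] = {"node": None, "phase": "Pending"}

    def pods_where(self, predicate):
        return [name for name, pod in self._store.items() if predicate(pod)]

    def bind(self, name, node):
        self._store[name]["node"] = node

    def set_phase(self, name, phase):
        self._store[name]["phase"] = phase

    def get(self, name):
        return dict(self._store[name])


def scheduler_step(api, nodes):
    """Bind every unscheduled pod to a node ("Hey API server, the pod should run on node-01")."""
    for name in api.pods_where(lambda p: p["node"] is None):
        api.bind(name, nodes[0])  # placeholder for real filtering and scoring


def kubelet_step(api, node):
    """Start every pending pod that has been bound to this kubelet's node."""
    for name in api.pods_where(lambda p: p["node"] == node and p["phase"] == "Pending"):
        # A real kubelet would call the container runtime through the CRI here.
        api.set_phase(name, "Running")


api = ApiServer()
api.create_pod("web")                         # user request, validated and stored
scheduler_step(api, ["node-01", "node-02"])   # scheduler picks a node
kubelet_step(api, "node-01")                  # kubelet on that node starts the pod

print(api.get("web"))
```

Notice that the scheduler and the kubelet never talk to each other or to the store directly. Everything goes through the API server.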

💡 Kube-proxy:

kube-proxy is a network proxy that runs on each worker node and maintains the network rules that route traffic addressed to a Kubernetes Service to the pods backing it.

It can operate in several modes, such as iptables and IPVS, to keep communication between services and pods efficient and reliable.
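To picture what "routing service traffic to pods" means, here is a minimal sketch that maps a service address to one of its backing pod endpoints. `ToyServiceProxy` and the addresses are made up for illustration, and round-robin is chosen only because it is predictable; the real selection behavior depends on the proxy mode, and kube-proxy programs kernel rules rather than handling each connection in Python.

```python
import itertools


class ToyServiceProxy:
    """Maps a service's virtual address to one of its backing pod endpoints."""

    def __init__(self):
        self._backends = {}

    def set_endpoints(self, service, endpoints):
        # Rebuilt whenever the set of ready pods behind the service changes.
        endpoints = list(endpoints)
        self._backends[service] = itertools.cycle(endpoints) if endpoints else None

    def route(self, service):
        backend_cycle = self._backends.get(service)
        if backend_cycle is None:
            raise LookupError(f"no endpoints for {service}")
        return next(backend_cycle)  # round-robin over the endpoints


proxy = ToyServiceProxy()
proxy.set_endpoints("10.96.0.10:80", ["10.244.1.5:8080", "10.244.2.7:8080"])

for _ in range(3):
    print(proxy.route("10.96.0.10:80"))
```

Clients only ever see the stable service address, while the pods behind it can come and go.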

If you've read up to here, congratulations! 🎉